From Hype to High-Impact: How Autonomous AI is Reshaping Software in the New Compute Era

After nearly a decade investing in European AI companies, we see the market entering a critical transition — from hype to measurable value creation. Our thesis, rooted in 30 AI investments since 2015, identifies four shifts shaping tomorrow's winners:

- Compute economics, rising data center constraints, and energy prices are radically reshaping business models

- Specialized, domain-specific models are displacing generic, one-size-fits-all approaches

- Autonomous AI agents are reshaping how enterprises operate

- Complex regulatory environments are acting as both barriers and catalysts

This document provides practical guidance for founders across the AI stack, highlighting where European founders can turn structural headwinds into an advantage.

Welcome to the AI Gold Rush—But Who Strikes Gold?

AI is evolving from a Big Tech playground into the operating system of modern enterprises. While capital is plentiful, only a select few will translate AI hype into sustainable value. More than $60 billion of venture funding flowed into AI startups in 2024, surpassing fintech and SaaS. Enterprises are embedding AI in revenue-critical workflows.

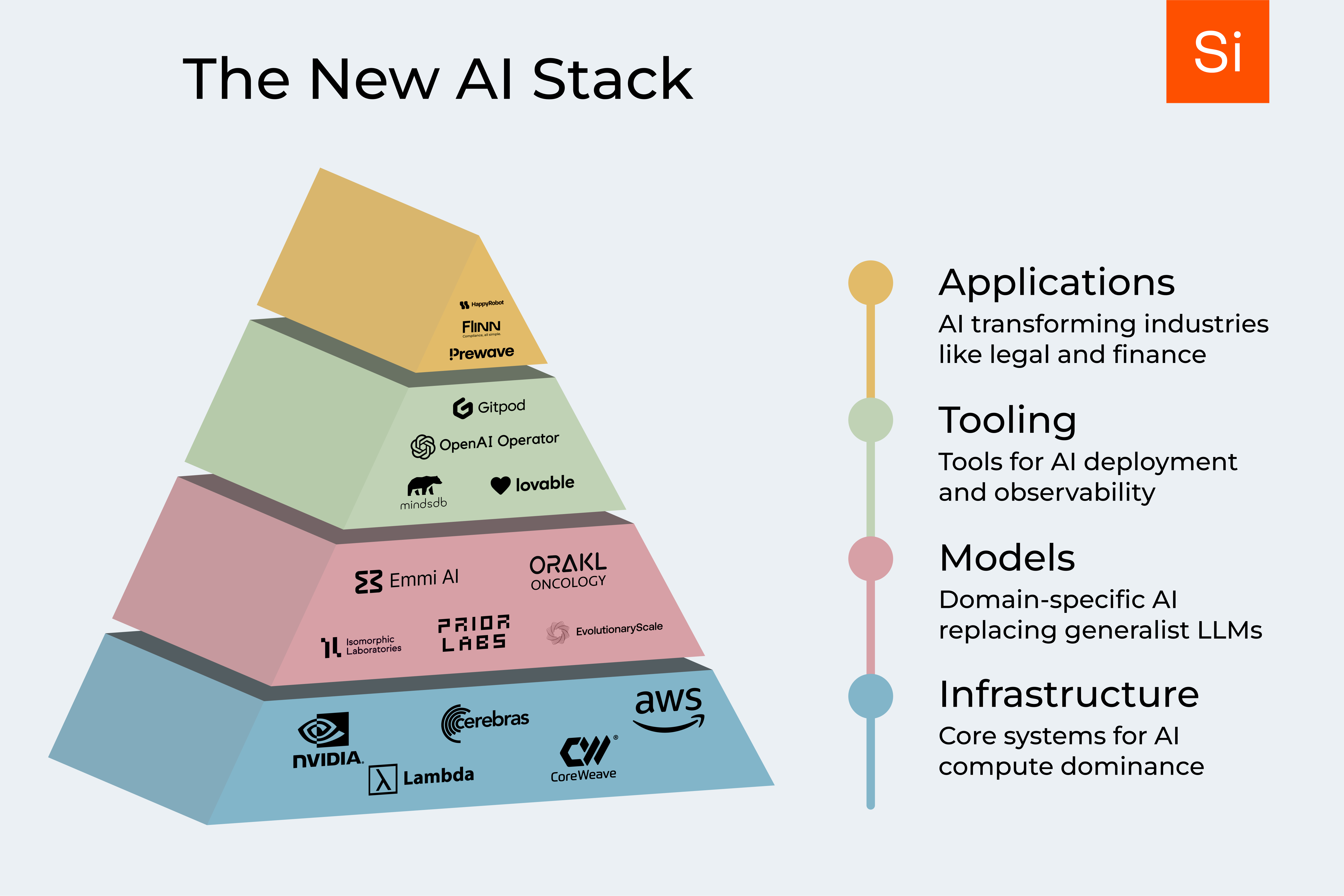

This thesis represents Speedinvest's thinking as of today, subject to change given the rapid pace of AI development. We've categorized AI investment opportunities into four layers:

- Infrastructure: The battle for AI compute dominance

- Models: Where generalist LLMs are being replaced by domain-specific AI

- Tooling: The unsung heroes of AI deployment and observability

- Applications: The transformation of entire industries from legal to finance

History suggests a portion of this paper will age like fine wine, and some parts will not. The pace of AI development is fast, and what seems cutting-edge today may look naïve next year. Our 2022 blog post "Future of AI" correctly predicted the strength of domain-tailored applications and AI-first workflows, yet we underestimated how quickly compute constraints would dominate and how agentic AI might alter software economics. But as venture capitalists, we live by the principle of strong opinions, weakly held, so we're committing our thoughts as they stand today and will continually refine our thesis as the market evolves.

Infrastructure — The Foundation of AI's Future

The AI revolution still runs on raw compute — the steam engine of our era. Without cutting-edge infrastructure, innovation stalls. Yet as basic capacity gets easier to buy, the frontier moves toward specialized silicon, sovereign-compute stacks, and edge-optimized deployments.

Compute demand is exploding, and not only for training. Inference-heavy reasoning means models such as OpenAI o1 and DeepSeek R1 linger over every token, while multimodal tasks (text-to-video, text-to-3D, robotics control) consume far more resources than text alone.

NVIDIA continues to dominate training thanks to CUDA and a mature software moat. AMD is finally winning share with MI300, and all hyperscalers now design their own chips, though only Google operates TPUs at production scale. On the inference side, Groq, Cerebras, and others pair custom silicon with self-owned data centers, sidestepping hyperscaler lock-in and proving that controlling the full stack is becoming table stakes for next-gen AI infrastructure.

AWS, Azure, and Google Cloud still lead overall, but a "neo-cloud" tier — CoreWeave, Lambda Labs, Together AI — offers GPU clusters tuned for ML at lower cost and higher performance.

Inside the racks, Data Center 2.0 is emerging. AI workloads demand higher power densities, direct-to-chip liquid cooling, and ultra-fast networks. Crusoe and Northern Data lower $/token by repurposing stranded energy and deploying immersion systems, while Phaidra applies reinforcement learning to cut cooling costs. McKinsey forecasts global data center capacity to rise at a 22% CAGR through 2030, driven largely by AI — a clear signal that infrastructure innovation is far from over.

Geopolitics is another force reshaping the stack. The race for sovereign AI (Gaia-X in Europe, China's domestic clouds, the Gulf states' mega-builds) turns product-market fit into product-policy fit, rewarding regions where energy strategy, regulation, and talent align.

A wave of chip challengers — Tenstorrent, RISC-V outfits, optical and in-memory specialists — promise cheaper, greener FLOPs. But customers ultimately buy system economics: $/token and tokens/s, not peak TFLOPs. Only solutions that cut the total cost of ownership at scale will matter.
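To make the "$/token and tokens/s, not peak TFLOPs" point concrete, here is a minimal sketch of how a buyer might compare two systems. Every name and figure below is hypothetical and chosen only for illustration; none of it describes a real chip or vendor.

```python
from dataclasses import dataclass


@dataclass
class System:
    """A hypothetical accelerator deployment; all numbers are illustrative."""
    name: str
    peak_tflops: float        # headline spec-sheet number
    hourly_cost_usd: float    # assumed all-in cost: amortized hardware, power, hosting
    tokens_per_second: float  # assumed measured throughput on the target workload


def usd_per_million_tokens(system: System) -> float:
    """Cost to generate one million tokens at the measured throughput."""
    tokens_per_hour = system.tokens_per_second * 3600
    return system.hourly_cost_usd / tokens_per_hour * 1_000_000


# Hypothetical comparison: B has the lower peak TFLOPs but the better system economics.
system_a = System("A", peak_tflops=2000, hourly_cost_usd=4.00, tokens_per_second=2000)
system_b = System("B", peak_tflops=1200, hourly_cost_usd=2.50, tokens_per_second=2500)

for s in (system_a, system_b):
    print(f"{s.name}: {s.peak_tflops:.0f} peak TFLOPs, "
          f"${usd_per_million_tokens(s):.3f} per 1M tokens")
```

Under these assumed inputs, the system with the weaker spec sheet is roughly half the price per token, which is the comparison a customer actually pays for.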

For a seed-stage fund, competing at the foundry level is like entering Formula 1 on a bicycle. The real opportunity sits one layer up: software that squeezes more from existing hardware, deployment tools, and vertical-specific accelerators, where a few million still moves the needle.

Efficiency gains alone won't tame demand. Better chips and smarter compression lift adoption faster than they trim per-token cost, so aggregate energy use keeps climbing. Expect a bifurcation: ubiquitous low-cost models for mass tasks and premium, high-performance models for advanced workflows. As reasoning shifts to inference time, cost structures migrate from capex-heavy training to opex-heavy inference; winning will hinge on cost per token and tokens per watt rather than raw FLOPs.
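The arithmetic behind this rebound effect is simple enough to sketch. The figures below are hypothetical assumptions, not forecasts: per-token energy is halved by efficiency gains while cheaper tokens triple usage.

```python
def aggregate_use(tokens: float, per_token: float) -> float:
    """Total resource use (energy or spend) = volume x per-token intensity."""
    return tokens * per_token


# Hypothetical baseline: 1 trillion tokens at 2 units of energy per million tokens.
baseline = aggregate_use(tokens=1e12, per_token=2e-6)

# Assumed scenario: efficiency halves per-token energy, adoption triples volume.
after = aggregate_use(tokens=3e12, per_token=1e-6)

growth = after / baseline
print(f"Per-token energy fell 50%, aggregate energy changed by {growth:.2f}x")
```

Aggregate use rises by about half in this scenario even though every individual token got cheaper, which is why efficiency alone does not cap demand.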

Looking ahead, decentralized or federated compute (e.g., Bitfount), and software-led optimization layers — model compression, inference schedulers, dynamic sparsity — will ease hardware dependence while staying capital-efficient. These are precisely the pockets where early-stage investors can capture outsized returns without matching hyperscaler check sizes.

The Model Layer — Foundation Models Are Not Enough

Over the past few years, hyperscaler-backed AI labs have aggressively scaled foundational models in pursuit of AGI — the idea that a single, self-improving generalist model could solve every problem across domains. But that vision hasn't fully materialized. Recent developments suggest we're hitting practical limits of the current scaling laws: throwing more data and parameters at the problem yields diminishing returns. Instead, the next wave of differentiation is emerging from smarter architecture and design — advanced inference-time reasoning, domain specialization, and modular systems where smaller models are orchestrated by a central "reasoning hub." This shift opens the door for startups to build powerful, vertical-specific solutions without competing head-on with the largest labs.

Where we do see "domain-specific" models thriving is on token substrates other than text or images:

- In biotech, companies like Isomorphic Labs, Cradle, and EvolutionaryScale are training AI models on protein structures, genetic sequences, and enzyme functions, fundamentally reshaping drug discovery

- Orakl Oncology applies AI to accelerate oncology research, using predictive modeling to identify optimal cancer treatments

- Emmi AI is building models that approximate physical simulations

- PriorLabs is solving tabular data and time series with a novel transformer-based approach to Bayesian Inference

Beyond these examples, we're seeing specialized models emerge in industrial manufacturing (predicting equipment failures from sensor data), finance (analyzing transaction patterns to detect fraud), and agriculture (optimizing crop yields through soil and weather analysis). The common thread is that these models process domain-specific data types that general text models simply cannot handle effectively.

Meanwhile, with the release of OpenAI's o1/o3 and DeepSeek's R1, foundational models are now post-trained with sophisticated reinforcement learning and synthetic data generation methods, and compute scaling is pushed to inference time to roll out longer chains of thought. Essentially, the AI is allowed "more time to think." This has been called approximate reasoning, and it lays the groundwork for agentic AI because it helps models generalize to never-before-seen circumstances they encounter in the wild (for example, ordering a train ticket using a browser).

The next wave of model architectures will likely involve networks of smaller, specialized models orchestrated by a larger "parent" model, similar to how the human brain delegates tasks to specialized regions, enhancing overall efficiency and effectiveness. Over the next 18 months, we expect AI agents to shift from passive reasoning tools to active teammates — capable not only of responding to prompts but also autonomously managing multi-step workflows and even initiating actions.
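As a rough illustration of the "parent model delegating to specialists" pattern, consider the sketch below. It is a toy under stated assumptions: the specialist functions, the keyword table, and the `route` helper are all hypothetical, and a simple keyword lookup stands in for the delegation decision that a real parent model would make.

```python
from typing import Callable, Dict

# A specialist is anything that takes a task description and returns a result.
Specialist = Callable[[str], str]


def legal_model(task: str) -> str:
    """Hypothetical stand-in for a small legal-domain model."""
    return f"[legal] reviewed: {task}"


def finance_model(task: str) -> str:
    """Hypothetical stand-in for a small finance-domain model."""
    return f"[finance] processed: {task}"


def general_model(task: str) -> str:
    """Fallback used when no specialist matches."""
    return f"[general] answered: {task}"


# Hypothetical routing table; a real orchestrator would learn or infer this.
SPECIALISTS: Dict[str, Specialist] = {
    "contract": legal_model,
    "invoice": finance_model,
}


def route(task: str) -> str:
    """Delegate the task to the first specialist whose keyword appears in it."""
    lowered = task.lower()
    for keyword, specialist in SPECIALISTS.items():
        if keyword in lowered:
            return specialist(task)
    return general_model(task)


print(route("Check this contract for renewal terms"))
print(route("Summarize the meeting notes"))
```

The design point is the separation of concerns: the router only decides who handles a task, so specialists can be swapped or added without touching the orchestration logic.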

The Regulatory Landscape

Regulation is becoming a defining factor in AI model development. The EU AI Act is introducing strict compliance requirements, forcing companies to rethink data handling and model transparency. This is leading to the rise of regulatory-first AI companies, which are positioning compliance as a competitive advantage.

The EU AI Act is a quintessentially European initiative, well-intended but so bureaucratic that it risks becoming an innovation speed bump. While regulation is necessary, overly rigid compliance rules could push cutting-edge AI startups to more flexible regulatory environments, particularly in the U.S. or Asia. Japan, for instance, is adopting a more innovation-friendly regulatory stance, positioning itself to attract AI model development that might be deterred by European bureaucracy. The challenge for founders will be navigating compliance without getting stuck in endless paperwork.

Future Model Developments

In the next 18 months, we expect a further rapid acceleration of reasoning AI performance as more and more labs publish their models. This will also include software innovation in distillation, quantization, post-training, and other methods that enable smaller, cheaper models to perform on par with large, expensive ones. Synthetic data will increasingly play a key role in pre-training as publicly available datasets become exhausted, challenging developers to maintain robustness and diversity of training sets.
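For readers less familiar with quantization, the following is a minimal sketch of one common variant, symmetric 8-bit quantization, written in plain Python. The function names and sample weights are illustrative assumptions; production systems use optimized libraries and more elaborate schemes.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the integer codes."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only for illustration.
weights = [0.5, -1.27, 0.02, 1.0]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(codes, f"scale={scale:.4f}", f"max_error={max_error:.6f}")
```

Each weight is stored in one byte instead of four and is recovered to within half a quantization step, which is the basic trade of precision for size and cost that the paragraph above refers to.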

Multimodal AI models, combining text, vision, and structured data, will become more commercially viable and be rapidly adopted in real-world applications. We expect non-traditional token substrate models for tabular data, time series, physics, chemistry, and biology to have their first breakthroughs in the enterprise. Longer-term, foundational models may even autonomously conduct scientific research and accelerate discoveries in fields such as medicine, materials science, and engineering, fundamentally reshaping how innovation occurs across industries.

Tooling — Quiet Infrastructure, Lasting Moats

Scaling AI deployment requires robust, enterprise-grade tooling. Retrieval-Augmented Generation (RAG), prompt engineering, and orchestration frameworks are evolving from experimental hacks into production-hardened infrastructure. As AI diffuses across enterprise functions, observability, testing, and debugging tools are becoming mission-critical for ensuring transparency, compliance, and reliability.
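To show what the RAG pattern amounts to at its simplest, here is a toy sketch using only the standard library. It assumes a bag-of-words cosine similarity in place of a real embedding model and vector store, and the documents, helper names, and prompt template are all hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> Counter:
    """Lowercased word counts; a stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: List[str], k: int = 1) -> List[str]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: List[str], k: int = 1) -> str:
    """Assemble the retrieved context and the question into one prompt."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Hypothetical knowledge base, for illustration only.
DOCS = [
    "Refunds are processed within 14 days of the return request.",
    "Our data centers use direct-to-chip liquid cooling.",
    "Invoices are issued on the first business day of each month.",
]

print(build_prompt("How long do refunds take?", DOCS))
```

The resulting prompt would then be sent to a language model. Moving from a hack like this to production is largely a matter of the retrieval quality, evaluation, and observability concerns discussed in this section.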

Yet many enterprises now favor in-house solutions, driven by data privacy, cost control, and the need for deep customization. This puts pressure on standalone tooling startups to differentiate clearly, either through superior UX, tight vertical integration, or ownership of proprietary feedback and evaluation loops.

When we look at successful tooling startups, we see three clear paths to building defensible businesses:

- Becoming the system of record for AI-specific data and feedback loops, similar to how Salesforce owns customer data

- Creating workflow tools that streamline specific high-value use cases like document processing or customer support

- Developing enterprise-grade platforms that abstract away complexity for specific verticals, bundling multiple tools with industry-specific templates and workflows

The winners will not just offer technical functionality but solve specific business problems.

Emerging Tooling Trends

Looking ahead, several tooling trends are set to gain strong momentum:

- MindsDB provides a machine learning platform that integrates AI models directly into databases, enabling enterprises to use AI-driven analytics without complex integrations

- Clusterfudge solves AI model orchestration, while GitPod becomes the backbone of AI coding agents

- The emergence of interoperability standards across AI tooling—allowing different components to work seamlessly together—will create opportunities for startups that can bridge gaps between various AI systems and legacy enterprise software

The convergence of AI observability and security will become critical as enterprises demand more visibility into AI decision-making and compliance with evolving regulations. Additionally, vibe coding tools like Lovable and Cursor will go mainstream, expand accessibility to software engineering, and lead to a Jevons paradox moment for apps, games, and SaaS.

Evaluation and benchmarking of AI models remains a critical but largely unsolved challenge, given the diverse and subjective nature of AI tasks across different industries. New tooling companies that solve for this evaluation layer comprehensively will have significant market opportunities.

The Rise of Autonomous Agents

Perhaps most importantly, autonomous AI agents like OpenAI Operator, Deep Research, and many open-source agentic AI frameworks will move beyond experimental phases, handling complex multi-step workflows in startup and enterprise environments. This will shift the focus of tooling from individual agent capabilities to comprehensive frameworks for agent-to-agent orchestration, performance monitoring, and secure authentication.

Agents will therefore be the central topic for AI tooling, for example enabling collaboration between agents or building evaluation and feedback systems, in a quest to build a reliable support system for agents. Frameworks for managing agent interactions (handling authentication, coordination, secure credentialing, and payments) will be essential prerequisites for the widespread adoption of fully autonomous agentic systems.

As autonomy increases, behavioral integrity becomes a challenge. Enterprises will need to ensure agents not only work but also align with organizational norms, compliance needs, and product expectations. Thus, new standards and governance tools for autonomous agent behavior will become a critical investment area in the AI tooling ecosystem.
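One simple form such governance tooling could take is a policy check that runs before an agent's action is executed. The sketch below is hypothetical throughout: the `Action` and `Policy` types, the tool names, and the spend limit are assumptions made for illustration, not an existing standard or product.

```python
from dataclasses import dataclass
from typing import FrozenSet, Tuple


@dataclass(frozen=True)
class Action:
    """A step an agent proposes to take (hypothetical schema)."""
    tool: str
    amount_usd: float = 0.0


@dataclass(frozen=True)
class Policy:
    """Organizational limits the agent must respect (hypothetical schema)."""
    allowed_tools: FrozenSet[str]
    max_spend_usd: float


def check(action: Action, policy: Policy) -> Tuple[bool, str]:
    """Return (allowed, reason) for a proposed action."""
    if action.tool not in policy.allowed_tools:
        return False, f"tool '{action.tool}' is not on the allow-list"
    if action.amount_usd > policy.max_spend_usd:
        return False, f"spend {action.amount_usd:.2f} exceeds limit {policy.max_spend_usd:.2f}"
    return True, "ok"


# Illustrative policy: the agent may email and make small purchases only.
policy = Policy(allowed_tools=frozenset({"send_email", "purchase"}), max_spend_usd=100.0)

for proposed in (Action("send_email"), Action("purchase", 500.0), Action("wire_transfer", 10.0)):
    print(proposed.tool, check(proposed, policy))
```

Returning a reason alongside the decision matters as much as the decision itself, since audit trails are what compliance teams will ask for.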

AI Applications — Rebuilding Real-World Workflows

AI-native software isn't just augmenting legacy systems — it's rebuilding entire workflows from the ground up. But winning at the application layer requires more than model access or clever interfaces. It hinges on deep domain expertise, intimate customer understanding, and the ability to rethink workflows from first principles.

We prioritize vertical-specific platforms with clear, measurable ROI, especially in complex, regulated industries like legal, finance, healthcare, and industrial automation, where general-purpose tools fall short and data moats are defensible. Flank AI is a great example: they're building AI-native infrastructure for legal teams by streamlining compliance, vendor assessments, and risk workflows. In these sectors, AI-native apps don't just automate — they redefine what's possible.

AI is also extending into deeper operational layers. Prewave, for instance, helps global manufacturers and supply chain teams anticipate disruptions by analyzing massive volumes of unstructured external data. In healthcare, Flinn AI supports providers by simplifying compliance across complex regulatory frameworks—one of the largest administrative pain points in the sector. These real-world deployments are now showing tangible ROI, as demonstrated by companies like Klarna, whose AI-driven customer support agent effectively replaced hundreds of human reps, significantly boosting operational efficiency.

Beyond Software: Disrupting Consultancy

This shift doesn't just stop at software. AI is beginning to disrupt the human-capital-heavy consultancy model itself. Think less slide decks, more software. Palantir and others are showing how AI-powered decision layers can automate what previously required armies of analysts.

We expect more service businesses to emerge that are "software-wrapped consulting" from day one, especially in high-spend verticals like insurance, healthcare, and financial reporting. This transition—"services-as-software"—expands the addressable market well beyond traditional SaaS into a broader $4 trillion global professional services industry, automating repetitive tasks across roles in legal, HR, customer support, and beyond.

Physical World Applications

And while much of the narrative still centers on white-collar knowledge work, the physical world is following. In manufacturing, construction, logistics, biotech, and materials science, AI is set to increase resource efficiency, enable automated quality control, and reduce reliance on low-cost labor.

Robotics, driven by end-to-end neural networks, is entering a renaissance, though substantial challenges in data collection and simulation realism remain critical hurdles to broader deployment. As a result, we may see a wave of reshoring and new industrial competitiveness in high-wage regions, where software starts to eat the real economy. Ultimately, AI adoption across physical industries will significantly reshape competitive dynamics, potentially reversing decades-long outsourcing trends and fostering new ecosystems of high-tech manufacturing.

What Lies Ahead?

The coming years will see compute economics increasingly favoring operation-intensive inference tasks. We predict rapid commercialization of multimodal models, widespread adoption of autonomous agents, and at least three agent orchestration platforms reaching $100M ARR by 2027. Yet, this won't merely simplify jobs—it will intensify workloads, demanding higher productivity and significantly reshaping professional roles.

Companies that master end-to-end AI systems tailored to actual customer needs will capture large verticals and become a danger to the horizontal SaaS-enterprise offerings of the 2010s. In parallel, foundational model providers will increasingly seek vertical integration, branching out into infrastructure layers or developing end-user applications, as commoditization pressures reduce standalone model profitability.

Transforming Data and Interfaces

AI is fundamentally changing how we work with data. Suddenly, unstructured data—previously seen as too chaotic or difficult to process—becomes an accessible and valuable asset. AI enables businesses to extract insights from text, audio, and images in ways that were previously impossible, turning qualitative information into structured, actionable intelligence.

Additionally, AI is reshaping UX/UI paradigms, allowing for more flexible, natural language-driven interfaces that reduce friction and lower the barriers to adoption for non-technical users. Finally, voice AI is now essentially solved for all languages, which will further accelerate the adoption of AI. We see huge potential for companies such as HappyRobot that use sophisticated AI under the hood but present themselves to customers in their own preferred interface, such as email, text, or phone calls.

The Productivity Paradox

AI may not replace most jobs, but it will almost certainly overload many of them. Fears of mass unemployment often overlook the historical trend: technology tends to create more work, not less. In the near term, AI is more likely to intensify work than to eliminate it:

- Developers may be expected to ship 10x more code

- Legal teams will need to process 100x more documents

- Sales reps will be pushed to follow up on exponentially more leads

AI-enhanced productivity will raise expectations, not necessarily reduce workloads. This productivity-driven expectation shift will push enterprise spending on AI even higher, as companies aim to keep pace with competitors who successfully integrate AI. This dynamic gives rise to a new skill gap: not between experienced and junior workers but between those who can effectively manage AI teammates and those who can't.

Redefining Professional Excellence

Beyond technology, AI is redefining what excellence looks like across functions like engineering, marketing, and sales. Traditional expertise is becoming less critical than the ability to leverage AI tools effectively. A developer who knows how to use AI-driven coding assistants can be as productive as an entire team was five years ago.

The same applies to GTM functions: marketers who master AI-powered analytics, content generation, and campaign automation will dramatically outperform those who rely on legacy playbooks. Professional functions like enterprise sales are likely to adopt tools that help 10x the output of their existing workforce.

Across all functions, expertise in AI tools and workflows is becoming a core competency, drastically changing hiring criteria and talent development strategies across industries. This shift means that when we assess founders, we increasingly look not just at their experience but at how well they embrace and integrate AI-driven productivity enhancers.

Bottom Line: We're All Riding the AI Wave of Opportunity and Uncertainty

Speedinvest remains committed to identifying and backing the future winners in this evolving landscape. The AI revolution is moving fast, faster than anyone can fully predict. Some of today's bold bets will turn into industry-defining successes, while others will serve as cautionary tales. That's the nature of venture investing, and frankly, that's what makes it fun.

We'll continue to adapt, challenge our own assumptions, and back the founders who don't just ride the AI wave but shape where it's heading next. Clarity will remain a moving target, and working with outstanding entrepreneurs remains the best way to stay ahead of the curve.
